For decades, many Leap Ambassadors have advocated for getting government to fund what works. Yet some are deeply skeptical, while others are modestly optimistic, that Social Impact Bonds (SIBs) and Pay for Success (PFS) could catalyze a greater emphasis on performance-based public funding. So far, there is limited evidence that government agencies commissioning SIBs have, as Daniel Stid put it, “the wherewithal to design, negotiate, oversee, and execute these schemes.” However, as Brad Dudding explained, “If SIBs are implemented with rigor, the model could lead to fundamental changes in how government works by placing an emphasis on funding high-performing, evidence-based practices.” At the very least, SIBs are helping move performance to center stage.

Takeaways

If SIBs are to fundamentally change how government works, ambassadors recommend giving greater emphasis to the following five areas:

1) Developing government agency wherewithal. PFS funding models aren’t new; SIBs are the latest iteration. For decades, government agencies at every level have struggled to make outcomes-based funding work. We must ensure that those commissioning SIBs have, as Daniel Stid put it, “the wherewithal to design, negotiate, oversee, and execute these schemes.” This long-term proposition will require leadership with, as Daniel wrote in a paper for a Federal Reserve Bank of San Francisco publication, “the drive and public sector management chops needed to transform how human services are funded and delivered in this country.” Fortunately, there is already evidence that this is possible. Brad Dudding reports that the Center for Employment Opportunities’ (CEO) PFS partnership with the New York State Department of Corrections and Community Supervision “has been downright innovative in how government and service providers can work together in pursuit of a common goal.” Molly Baldwin says Roca has had a similarly positive experience, which she believes will help government move toward greater transparency and stronger partnerships.

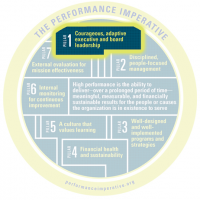

2) Selecting high-performing nonprofit organizations. Best practices and evidence‐based intervention models are only as good as the organizations implementing them. Nonprofits selected to receive SIBs should demonstrate “the ability to deliver—over a prolonged period of time—meaningful, measurable, and financially sustainable results for the people or causes the organization is in existence to serve” (high performance, as defined in The Performance Imperative).

Since leaders, management, and other factors change over time, selection should be based on a forward-looking assessment (e.g., do they have the ability, and are they equipped, to deliver what is proposed?) rather than a rear-view mirror judgment of what they’ve done in the past.

3) Restricting use of the term “impact.” Following the terminology of evaluators, we should be specific about the definition of the term “impact,” which is often confused with “very long-term outcomes.” As David Hunter explained, for evaluators, outcomes can only be considered impact when there is “a scientifically acceptable level of proof that they are the result of a given intervention and not the result of some other factors.” In other words, outcomes delivered over a long period of time may or may not be impactful. We can only know a service or program has had impact when we have proof that it has made a meaningful difference beyond what would have happened anyway.

And thereby, clarifying what constitutes an outcome. Before assessing whether a program or service is having an impact, we must be clear about what we mean by an “outcome.” Too often, leaders fail to draw a clear distinction between outputs (e.g., meals delivered, youth tutored) and outcomes (e.g., meaningful changes in knowledge, skills, behavior, or status). Again, following the lead of evaluators (h/t David Hunter), an outcome is some kind of change that:

- Is meaningful

- Has clear indicators specifying what to assess in order to measure it

- Is observed and measured using valid and reliable methods

- Is sustained over a reasonable length of time, and

- Can be logically linked to the activities of the program, service, or intervention under consideration.

This isn’t just semantics. As Molly Baldwin put it, “Anything and everything we can do to disrupt traditional government grants for outputs is critical if we are ever going to change anything.”

4) Ensuring impact as the payment metric and external evaluation to validate results. Most SIBs currently operating in the U.S. have outside evaluators (this is not the case outside the U.S.). Investors don’t get paid unless evaluators scientifically validate that a program or service has a positive net impact. However, other SIBs under consideration or in development are increasingly trending toward using outcome measures rather than net impacts as the payment metric. To inspire the public’s trust, government agencies must show that they want to know to what extent, and for whom, the programs and services in which they are investing public dollars are making a meaningful difference beyond what would have happened anyway. This requires rigorous, independent validation of results to determine net impact. However, it does not mean dismissing administrative data; internal monitoring should always complement outside evaluation.

5) Thinking long-term. SIBs are not the end game. Getting government to fund high-performing organizations that deliver evidence-based programs is the end game. To this end, Paul Carttar argues, “before endorsing SIB deals, citizens should demand that sponsoring governments unambiguously commit to adopt proven programs and hold themselves accountable for realizing the expected benefits.” Brad Dudding explains that when CEO’s SIB deal is finished, CEO does not want a new SIB arrangement: “What we really want is for government to double down on what works by creating a new type of performance-contracting vehicle which maintains and further scales the intervention in an ongoing way.” If SIBs are to make a lasting difference, supporters should focus as much on post-deal architecture as on the original deals.

As with any new model, there are lovers, haters, and doers. Some SIBs will meet all of the criteria we lay out above; others won’t but may still help shift minds toward a culture of performance. As a community, we can contribute by learning from the doers and amplifying their lessons as we work toward high performance for the people and causes we serve.

References

Throughout the discussion, ambassadors pointed to several useful references for a better understanding of what’s at stake in this debate:

- Learning from Experience: A Guide to Social Impact Bond Investing – MDRC president and Leap Ambassador Gordon Berlin shares insights from implementing the nation’s first SIB at Rikers Island and lays out four tensions SIB architects must address.

- “Pay for Success Is Not a Panacea” – Daniel Stid’s assessment for the Federal Reserve Bank of San Francisco’s Community Development Investment Review.

- “Using Social Impact Bonds to Spur Innovation, Knowledge Building, and Accountability” – A vision for SIBs that moves beyond saving money by David Butler, Dan Bloom, and Timothy Rudd of MDRC for the Federal Reserve Bank of San Francisco’s Community Development Investment Review.

- Urban Institute’s Pay for Success Initiative – Announcement of a multidisciplinary team of researchers and practitioners, supported by the Laura and John Arnold Foundation (shared by Mary Winkler).

- Video: “The Future of Pay for Success with OMB Director Shaun Donovan, Civic Leaders, and Innovators” – Expert panel discussion reflecting on the current state of Pay for Success, lessons learned from the earliest pilots, and the future of this field (pointed to by Mary Winkler).

- “Assessing Nonprofit Risk in PFS Deals” – A framework to guide nonprofits in effectively assessing risk and opportunity in Pay for Success contracts, by CEO and Executive Director of the Center for Employment Opportunities Sam Schaeffer (shared by Brad Dudding).

- “After Pay for Success: Doubling Down on What Works” – Article by CEO and Executive Director of the Center for Employment Opportunities Sam Schaeffer, Vice President Advisory Services at Social Finance Jeff Shumway, and Director of Social Finance Caitlin Reimers Brumme (shared by Brad Dudding).

- Isaac Castillo’s “Who Would You Fund?” exercise – Illustration of collecting outcomes with an emphasis on the importance of comparison data (shared by Isaac Castillo).

Acknowledgments

Our thanks to Michael Bailin, Molly Baldwin, Ingvild Bjornvold, Paul Carttar, Sam Cobbs, Brad Dudding, David Hunter, Kris Moore, Paul Shoemaker, Daniel Stid, and Mary Winkler for sharing their insights.